Documents show that the Australian Federal Police (AFP) used Clearview AI — a controversial facial recognition technology now under federal investigation.

At least one officer tested the software during a free trial, using images of herself and another staff member. In another case, staff from the Australian Centre to Counter Child Exploitation (ACCE) ran searches on five “persons of interest.”

Emails released under Freedom of Information laws reveal that one officer even installed the app on their personal phone without seeking information security approval.

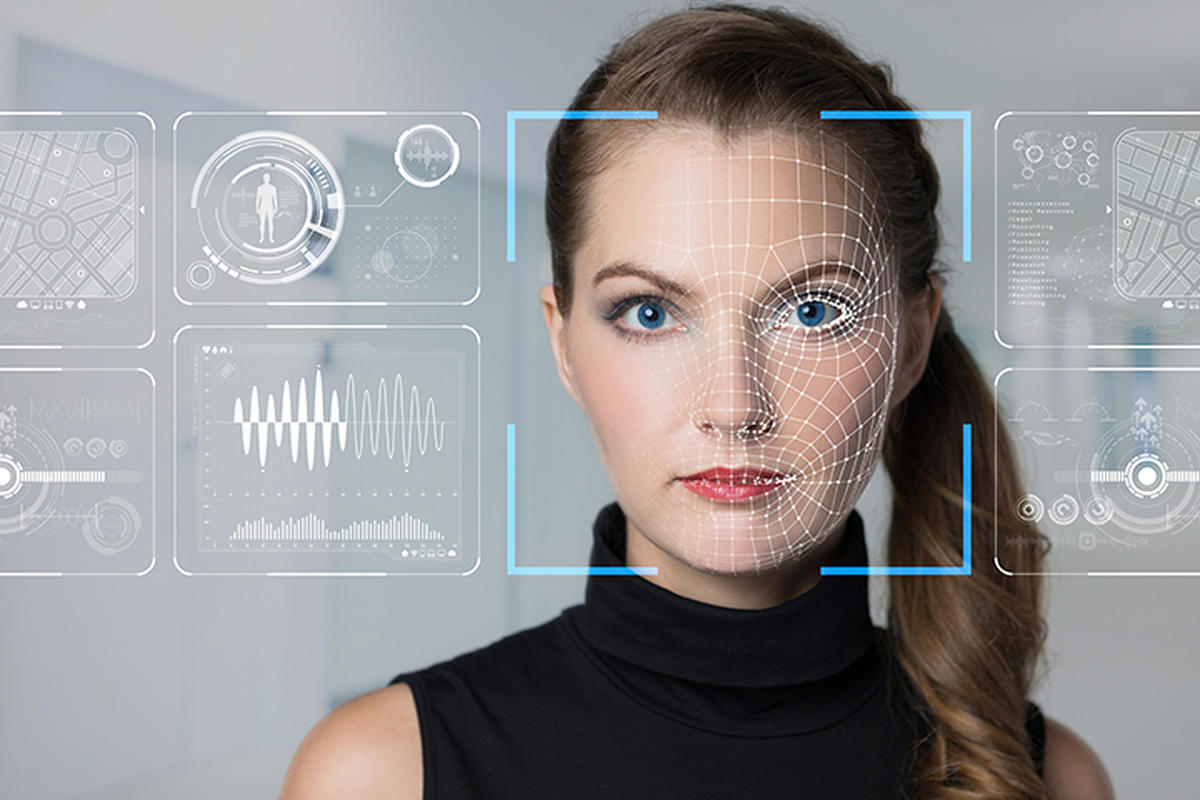

Clearview AI, a New York-based company, built a tool that matches faces against billions of photos scraped, without user consent, from platforms such as Facebook and Instagram. The New York Times exposed this data collection in January 2020, sparking global outrage.

Initially, the AFP denied any involvement with Clearview AI. It later admitted that officers had tested the software. A spokesperson said the agency had conducted a “limited pilot” to evaluate the tool's potential in fighting child exploitation and abuse, but declined to say whether the trial had been properly approved.

Last week, the Office of the Australian Information Commissioner (OAIC) launched an investigation into Clearview’s use of scraped data and biometrics, in collaboration with the UK’s Information Commissioner’s Office (ICO).

Although the AFP acknowledged the trial in April, the extent of its use remained unclear. The AFP confirmed that no formal contract was signed. Opposition leaders criticized the lack of oversight, calling the use of private services for official investigations “deeply concerning.”

Newly released documents show that AFP officers began accessing Clearview AI in November 2019, testing it with images of staff and “persons of interest.” The AFP later reported that the software helped locate one suspected victim of imminent sexual assault, and says no Australian personal information was retrieved.

Internal emails show that some officers questioned the lack of approval. In December 2019, one asked if information security had raised concerns. Another replied, “We haven’t even gone down that path yet,” admitting they were running the app on their personal phone.

By January 2020, as media reports intensified, staff began voicing unease. “There should be no software used without proper clearance,” one employee warned. Others joked about the AFP publicly denying use of the tool while they were actively using it.

The AFP ordered all officers to stop using Clearview AI on January 22, 2020 — four days after the New York Times story broke.

Clearview AI’s founder, Australian businessman Hoan Ton-That, personally emailed an AFP officer in December 2019 asking for feedback. Shortly afterward, an AFP officer reported that the software had matched a mugshot to a suspect’s Instagram account and praised its accuracy.

Ton-That stated that Clearview complies with applicable laws and will cooperate with investigations by the OAIC and ICO. The company has since suspended operations in Australia and the UK.